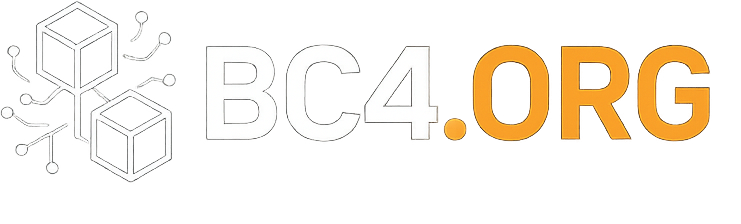

The recent release of Sora 2 has caused major disruption across online platforms, and its integrated audio capabilities and social collaboration features quickly became catalysts for widespread meme creation. Within hours of release, online communities had turned the platform into an experimental testing ground, pushing its content moderation systems to their limits.

The rapid spread of user-generated content has raised significant questions about protecting digital identity and intellectual property rights in environments where content is created in real time. Platform administrators and content moderators have struggled with the sheer volume of generated media. This shows how difficult it has become to enforce community standards as content creation tools evolve.

Industry observers noted that the deployment exposed critical weaknesses in existing content moderation frameworks, particularly in how quickly they can respond to inappropriate content. The incident has renewed discussion among technology ethicists and legal experts about whether current digital rights protections are adequate when content can be generated and distributed almost instantly.

The situation continues to evolve as platform developers work to implement more robust moderation protocols while balancing creative expression against responsible content governance.